Docker has transformed the way we build, deploy, and manage applications. With Docker, you can bundle code together with all its dependencies into self-contained, portable containers, so your application runs reliably and consistently from one computing platform to another.

Images are at the heart of Docker technology. A Docker image is a collection of read-only layers that holds everything required to launch a fully operational container: system tools, libraries, code, and other dependencies. It’s an executable software package that comes with instructions for setting up containers.

To create a container image, you use a Dockerfile, a simple text document containing a set of instructions for assembling an image. A Dockerfile lets you define the dependencies that go into the image.

By properly managing Docker dependencies, you can streamline your software development process and make the most of the revolutionary containerization technology.

This blog post talks about some best practices for Docker dependency management:

1. Enforce Maintainability

Ensuring your dependencies are easy to maintain is critical to the well-being of your containerized application. Delivering images that are readable, efficient, and maintainable can take your Docker development efforts to the next level.

Let’s illustrate some ways of enforcing the maintainability of your dependencies.

a) Use Specific Image Tags

A tag is usually used to reference the version of a Docker image. When selecting the base image for your Docker application, it’s recommended to go for a specific tag as much as possible.

While using the latest tag may seem convenient, it can introduce breaking changes over time and impair the maintainability of your application. The latest tag is also the default tag used when you do not specify one in your Docker commands.

The latest tag is rolling—if you use that tag, it implies the underlying image version can change to reflect the ‘latest’ changes. This may lead to non-deterministic side effects when rebuilding the image later.

By using a specific image tag, you get more deterministic behavior from your Docker dependencies. If you’re familiar with npm dependency management, it’s the same determinism that lock files provide during npm install.

Here is an example of a Dockerfile with a specific image tag:

FROM node:16.2.0-alpine
WORKDIR /app
COPY . .
RUN npm install
EXPOSE 8080
CMD ["node", "app.js"]
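To carry the lock-file analogy into the image itself, the following is a minimal sketch rather than a definitive setup. It assumes the project has a package-lock.json next to package.json and that app.js is the entry point, as in the example above.

```dockerfile
# Pin the base image to an exact tag.
FROM node:16.2.0-alpine
WORKDIR /app

# Copy only the manifest and lock file first, so this layer stays cached
# until the dependency list changes. Assumes package-lock.json exists.
COPY package.json package-lock.json ./

# npm ci installs exactly what the lock file records and fails if
# package.json and package-lock.json disagree.
RUN npm ci

# Copy the rest of the application source.
COPY . .
EXPOSE 8080
CMD ["node", "app.js"]
```

Copying the manifest files before the source means a code-only change reuses the cached dependency layer instead of reinstalling every package.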

b) Sort Multi-Line Arguments

Sorting multi-line arguments alphanumerically will enhance the developer experience. It eases implementing later changes, prevents duplication of packages, and improves code readability.

Here is an example:

RUN apt-get -y update && \
    apt-get install -y apache2 \
    git \
    golang \
    python

c) Decouple Applications

It’s recommended to have one concern per container. If your applications are decoupled into multiple containers, it can improve the overall container management process.

Limiting every container to a single concern can help keep your application clean and modular. It will make managing your dependencies easy and hassle-free.

Suppose you’ve deployed a containerized MEAN application. Because Express.js runs inside the Node.js process, a typical split is three containers, each with its own distinct image: one for MongoDB, one for the Node.js and Express.js back end, and one for serving the Angular front end.

d) Use .dockerignore File

A .dockerignore file is similar to a .gitignore file. You can use it to explicitly exclude certain files, directories, or dependencies from the build context, and therefore from the image building process.

With a .dockerignore file, you can improve the build performance and ease the maintainability of your application.
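As a rough illustration, the sketch below lists a hypothetical .dockerignore as comments at the top of a Dockerfile, to show how the two files interact. The ignore entries and the application layout are assumptions, not taken from a real project.

```dockerfile
# Hypothetical .dockerignore placed next to this Dockerfile
# (shown here as comments; the entries are illustrative assumptions):
#
#   node_modules
#   .git
#   *.log
#   .env
#
FROM node:16.2.0-alpine
WORKDIR /app

# With the entries above, this COPY skips node_modules, .git, .env, and
# root-level *.log files, because they never enter the build context.
COPY . .
RUN npm install
CMD ["node", "app.js"]
```

Excluding node_modules also keeps packages installed on the host from overwriting the ones installed inside the image.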

2. Keep Your Images Small

The image size is critical in determining the performance of your containerized application. By keeping images small, you can efficiently manage Docker dependencies.

Small images lead to faster deployments and optimal performance of your applications. They also help in improving security by reducing the attack surface area.

Let’s point out some ways of optimizing the size of your images.

a) Avoid Installing Unnecessary Dependencies

If you install packages just for the sake of it, you’ll increase your build time and impair the overall performance. For example, adding a text editor in a database image will result in needless complexity.

You can also set up the apt package manager to avoid installing redundant dependencies. To achieve that, add the --no-install-recommends flag to your apt-get calls.

Here is an example:

RUN apt-get -y install --no-install-recommends <package-name>

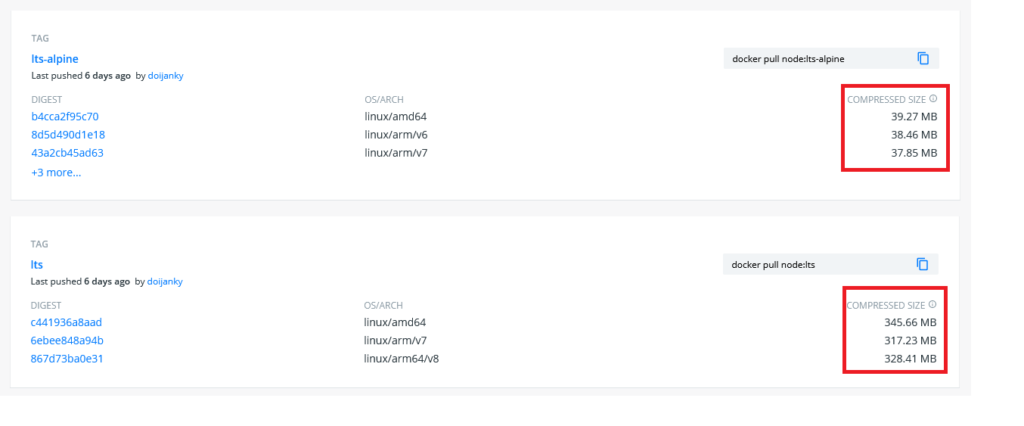

b) Choose Base Image With Smallest Size

The base image, or the parent image, is the initial layer defined by the FROM instruction in the Dockerfile. The subsequent instructions that reference other dependencies build on top of the parent image. If the parent image is lightweight, the resulting image will also be lightweight, making it quicker to download.

For example, choosing Alpine-based image variants can drastically reduce your image size and, in most cases, speed up downloads and deployments. Using the lts-alpine version instead of lts reduces the parent image size by nearly nine times.

c) Reduce The Number Of Layers

Instructions such as RUN, COPY, and ADD each create an additional layer in the image, so keeping these instructions to a minimum improves build performance.

For example, instead of including multiple RUN commands in the Dockerfile, you can consolidate them into a single instruction.

Here is an example:

RUN apt-get -y update
RUN apt-get install -y python

Let’s combine the commands:

RUN apt-get -y update && apt-get install -y python

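While the first option adds two layers to the image, the second one adds only one. Fewer layers mean less overhead, and any cleanup performed in the same RUN instruction genuinely shrinks the image, because files deleted in a later layer still take up space in the earlier one.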
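The single-layer approach can be taken one step further by cleaning up in the same instruction. This is a minimal sketch: the debian:12-slim base image and the python3 package name are assumptions chosen for illustration.

```dockerfile
# Base image and package name are illustrative assumptions.
FROM debian:12-slim

# Update, install, and clean up in a single RUN instruction so the
# package index never persists in any layer of the image.
RUN apt-get update && \
    apt-get install -y --no-install-recommends python3 && \
    rm -rf /var/lib/apt/lists/*
```

If the rm command ran in a separate RUN instruction, the package lists would still be stored in the earlier layer and the image would not get any smaller.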

d) Use Multi-Stage Builds

Multi-stage builds are a useful Docker feature that lets you decrease the amount of clutter in your final image. You can build your application in a first “build” stage and copy the results into a second stage, without needing a second Dockerfile.

This feature enables you to choose the dependencies you want to copy from one stage to the other, ensuring you keep only the ones required in the final image.

Using multi-stage builds is a good practice for reducing the use of build dependencies in the deployed containerized application. It allows you to separate build and runtime environments, which subsequently shrinks the size of the final image.
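A multi-stage build for a Node.js application might look roughly like the sketch below. It assumes a package-lock.json is present, that package.json defines a hypothetical "build" script writing its output to dist/, and that dist/app.js is the entry point. None of these names come from a real project.

```dockerfile
# Stage 1: build stage with the full set of development dependencies.
FROM node:16.2.0-alpine AS build
WORKDIR /app
COPY package.json package-lock.json ./
RUN npm ci
COPY . .
# Assumes package.json defines a "build" script that writes to dist/.
RUN npm run build
# Drop development-only packages before they are copied below.
RUN npm prune --production

# Stage 2: runtime stage that receives only what the app needs to run.
FROM node:16.2.0-alpine
WORKDIR /app
COPY --from=build /app/package.json ./
COPY --from=build /app/node_modules ./node_modules
COPY --from=build /app/dist ./dist
EXPOSE 8080
CMD ["node", "dist/app.js"]
```

Only the final stage ends up in the shipped image. The source tree, build tooling, and development packages from the first stage are left behind.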

3. Keep Images Secure

Keeping images secure is an important aspect of Docker dependency management. By maintaining Docker image security throughout the development lifecycle, you can guard your container environment against security flaws and insecure coding practices.

Let’s delve into some ways of keeping your Docker images secure.

a) Scan Images

Docker image scanning involves analyzing the contents of an image to discover security vulnerabilities or bad coding practices.

It is a security best practice to continuously scan your images at every step of the development process—from the initial development stage to production. That’s the best way to ensure your containerized environment is safe from weaknesses that could wreak havoc on your application.

You need a specialized tool to effectively scan your images and detect known flaws. With a good scanner, you can constantly analyze your images and fix weaknesses before they bring your application to its knees.

For example, Mend provides an end-to-end solution that helps you keep your containerized environment safe and secure. With the tool, you can continuously discover vulnerabilities throughout the development lifecycle and get real-time alerts whenever a problem is detected, which reduces the risk of shipping vulnerable dependencies in your Docker applications.

b) Update Frequently

It’s important to update your Docker images frequently. New image versions usually include patches for discovered security holes. If you stick to an old version, you could leave your containerized application susceptible to malicious attacks.

As pointed out earlier, it’s recommended to use specific image tags when making updates. If you use the latest tag, you could introduce breaking changes to your application.
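One way to keep a specific tag while making updates easy is to declare the version once as a build argument. This is a sketch under assumptions: NODE_VERSION is a hypothetical argument name, and its default value is only an example.

```dockerfile
# Declare the pinned version once. An ARG placed before FROM can be
# used in the FROM line. The default value here is only an example.
ARG NODE_VERSION=16.2.0
FROM node:${NODE_VERSION}-alpine
WORKDIR /app
COPY . .
RUN npm install
CMD ["node", "app.js"]
```

Updating then becomes a one-line change to the default. You can also try a newer release without editing the file by running docker build --build-arg NODE_VERSION=<new-version> .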

Proper Docker Dependency Management Takes Effort

Mastering how to optimize your Docker development environment takes time and effort. You need to learn the ropes to ensure your container application delivers the anticipated value.

We hope that the above best practices for managing Docker dependencies have laid the groundwork for harnessing the full power of the container technology.

If you manage dependencies well, you can make the most of the innovative Docker technology and create applications that are performant, secure, and scalable. It’s what you need to improve your development efforts and realize the promise of containerization—code once and run on any platform, irrespective of the scale.