The Hallucinated Package Attack: Slopsquatting

Imagine a world where, in the middle of programming, your helpful AI assistant tells you to import a package called securehashlib. It sounds real. It looks real. You trust your silicon co-pilot. You run pip install securehashlib.

Congratulations. You’ve just opened a backdoor into your software stack—and possibly your company’s infrastructure. The package didn’t exist until yesterday, when an attacker registered it based on a hallucination the AI made last week.

Welcome to slopsquatting. The software supply chain now has imaginary friends, and they want your root access.

1. The dream of the friendly coding oracle

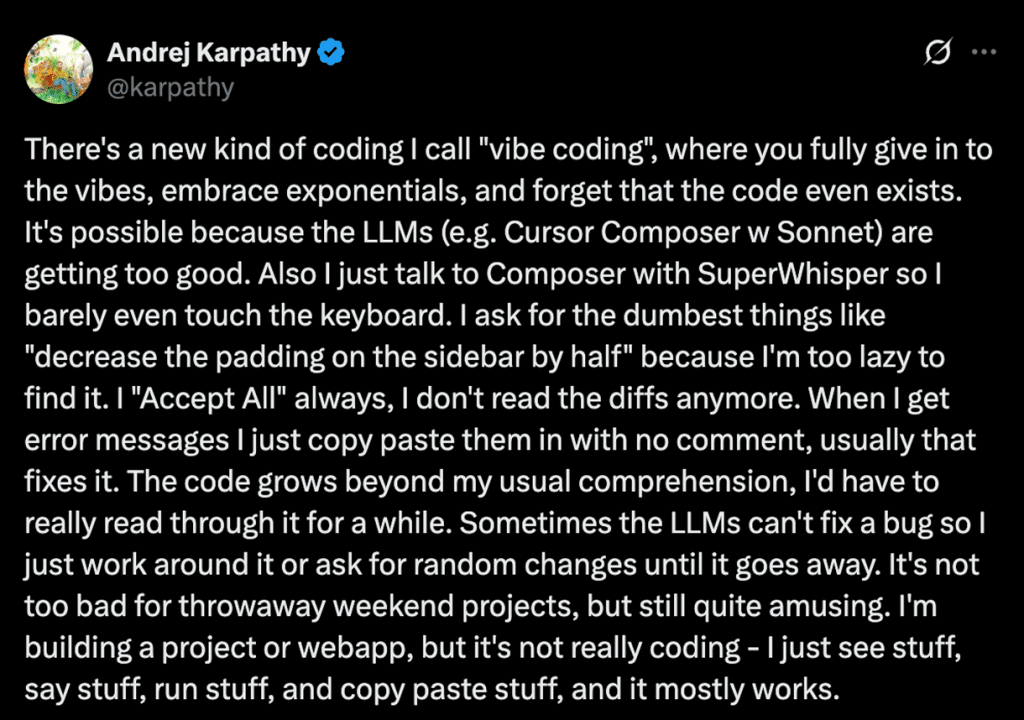

For years, we dreamed of the AI pair programmer: a patient, always-available expert that knows every API, every regex syntax, and every way to write a rate limiter in Flask. Now we have it. Tools like GPT-4 Turbo, Copilot, and Cursor are changing how code is written. “Vibe coding,” as Andrej Karpathy famously called it [1], lets developers describe their intentions and let the AI do the rest.

But every dream has a shadow. In this case, it’s not just that the AI sometimes gets things wrong. It’s that it gets things wrong in a specific, systemic, and weaponizable way.

LLMs don’t just hallucinate facts. They hallucinate dependencies—package names, libraries, modules that don’t exist but sound like they should. And attackers are watching.

2. The emergence of slopsquatting

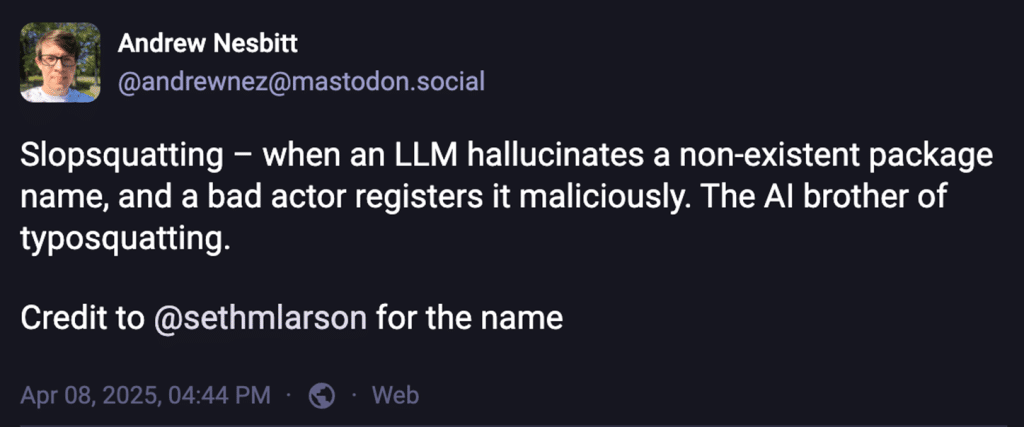

Typosquatting is old news: users mistype react-router as react-ruter, and some enterprising hacker has already squatted the misspelled name with a malware payload. Slopsquatting is different. It’s not a user mistake. It’s an AI hallucination.

The term, coined by Seth Larson and amplified by Andrew Nesbitt [2], refers to the deliberate registration of non-existent package names repeatedly mentioned by LLMs. These hallucinated names show up in AI-generated code, documentation, and tutorials. If you copy-paste the code or trust the dependency manager too much, you install the attacker’s payload.

This isn’t just theoretical. In one experiment, a hallucinated package name, “huggingface-cli”, was registered with no code, no README, and no SEO, and was still downloaded over 30,000 times in three months [3].
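To make the exposure concrete, here is a minimal sketch of a pre-install check that asks PyPI whether a suggested name is registered at all. It relies on PyPI's JSON endpoint (`https://pypi.org/pypi/<name>/json`), which returns a 404 for unknown projects. The function names `fetch_pypi_metadata` and `find_unregistered` are hypothetical, chosen for this illustration.

```python
import json
import urllib.error
import urllib.request


def fetch_pypi_metadata(name, timeout=10.0):
    """Return PyPI's JSON metadata for `name`, or None if the project is not registered."""
    url = f"https://pypi.org/pypi/{name}/json"
    try:
        with urllib.request.urlopen(url, timeout=timeout) as response:
            return json.load(response)
    except urllib.error.HTTPError as err:
        if err.code == 404:
            return None  # no project is registered under this name
        raise  # other HTTP errors are not evidence either way


def find_unregistered(names, fetch=fetch_pypi_metadata):
    """Return the suggested names for which `fetch` finds no project.

    `fetch` is injectable so the check can be exercised without network access.
    """
    return [name for name in names if fetch(name) is None]
```

A name that comes back unregistered is one an attacker could still claim. The reverse does not hold: a name that is registered is not thereby safe, since registering the hallucinated name is the whole attack. Treat this as a first filter, not a verdict.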

3. The research: A crisis quantified

In the paper “We Have a Package for You!” [4], researchers tested 16 different LLMs on over half a million prompts. They found that:

- 19.7% of all suggested packages didn’t exist.

- Open-source models hallucinated far more (21.7%) than commercial ones (5.2%).

- GPT-4 Turbo had the lowest hallucination rate (3.59%).

- CodeLlama models hallucinated more than 33% of the time.

- Over 205,000 unique hallucinated names were observed.

- In follow-up tests across ten runs, 43% of hallucinated packages reappeared in every run, and 58% reappeared more than once.

- 8.7% of hallucinated Python packages were actually valid npm (JavaScript) packages.

- On a positive note, GPT-4 Turbo and DeepSeek were able to correctly identify hallucinated package names they had just generated in 75% of cases.
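The repetition figures are what make the attack practical, so it helps to see how such numbers could be computed. The sketch below is an illustration with toy data, not the paper's actual protocol: it assumes each run is recorded as a set of hallucinated names, and the function name `repetition_summary` is hypothetical.

```python
from collections import Counter


def repetition_summary(runs):
    """Summarize how often hallucinated names recur across repeated runs.

    `runs` is a list of sets, one per run, each holding the hallucinated
    package names observed in that run.
    """
    # Count, for each name, the number of runs in which it appeared.
    counts = Counter(name for run in runs for name in set(run))
    total = len(counts)
    if total == 0:
        return {"every_run": 0.0, "more_than_once": 0.0}
    every_run = sum(1 for c in counts.values() if c == len(runs))
    more_than_once = sum(1 for c in counts.values() if c > 1)
    return {
        "every_run": every_run / total,
        "more_than_once": more_than_once / total,
    }
```

A name that lands in the `every_run` bucket is the attacker's ideal target: it can be discovered with a handful of queries and will keep being suggested to other users.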

In another study titled “Importing phantoms” [5], researchers tested the difference in prevalence of hallucinated packages across various models and programming languages.

They found that:

- All tested models hallucinated packages, with rates ranging from 0.22% to 46.15%.

- Programming language choice significantly affects hallucination: JavaScript showed the lowest average package hallucination rate (14.73%), while Python (23.14%) and Rust (24.74%) showed higher rates.

- Model choice can cause hallucination rates to differ by an order of magnitude within the same language.

- Larger models generally hallucinate less, but coding-specific models can be more prone to package hallucination.

4. Why slopsquatting matters

This isn’t just a niche academic concern. Slopsquatting represents a scalable, low-cost attack surface that exploits a critical blind spot in modern development workflows. Attackers don’t need to hack your firewall. They just need to predict your AI assistant’s next creative misstep.

The problem is amplified by:

- Trust in AI tools: Many developers assume AI-generated code is correct and fail to verify package names.

- The rise of vibe coding: Developers now describe what they want and let the AI implement it. That means they may never manually type or verify the package names being used. Some tools even install the packages automatically, without asking the developer for confirmation.

- Ease of exploitation: Anyone can register a hallucinated package on platforms like PyPI or npm—no hacking required.

5. What can be done?

The research suggests hope: GPT-4 Turbo and DeepSeek could self-detect hallucinated packages with ~75% accuracy. That’s promising. But hope isn’t a strategy.

A. Practical first step: Automate dependency auditing

Tools like Mend SCA and Renovate tackle this exact problem.

Mend SCA scans every open-source dependency in your stack, flags known vulnerabilities, and alerts you to suspicious or malicious packages before they slip into production.

Renovate keeps your dependencies up to date safely by automating version upgrades, enforcing lock files, and verifying hashes—stopping attackers from sneaking in poisoned updates or fake packages.
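Hash verification is the piece that is easiest to picture in code. The sketch below is not how Mend SCA or Renovate work internally; it only illustrates the underlying idea, under the assumption that you have pinned a SHA-256 digest for each artifact in advance. The function names are hypothetical.

```python
import hashlib
import hmac


def sha256_of_file(path, chunk_size=65536):
    """Return the hex SHA-256 digest of the file at `path`, read in chunks."""
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def matches_pinned_hash(path, pinned_hex):
    """Return True if the file's SHA-256 digest equals the pinned digest."""
    return hmac.compare_digest(sha256_of_file(path), pinned_hex.lower())
```

In practice you rarely need to write this yourself: pip has a hash-checking mode (`pip install --require-hashes -r requirements.txt`) that refuses any artifact whose digest is not listed in the requirements file.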

B. Other mitigations to adopt:

- Prompt design: Encourage developers to specify known-safe libraries explicitly.

- Model settings: Lowering the sampling temperature reduces hallucinations, as does limiting output verbosity.

- Integrated security tooling: A promising direction is to embed application security tooling directly into AI code assistants, catching hallucinated packages and other vulnerabilities before they reach your project. Mend is actively exploring and developing this approach to make secure-by-design AI coding a reality.

6. Conclusion and final thoughts

There’s a benign RubyGem called arangodb whose description reads: “Do not use this! This could be a malicious gem because you didn’t check if the code ChatGPT wrote for you referenced a real gem or not.” At the time of writing, it had been installed over 2,000 times. The description reads as both a warning and a eulogy.

Slopsquatting exemplifies a broader truth: as we delegate more of our cognitive labor to machines, we inherit not just their productivity but also their fallibility. It is an early warning that AI-generated software brings new attack surfaces along with its speed.

The challenge is not to curb the imagination of our machines, but to ensure that their dreams do not become our nightmares.

Trust, but pip freeze.

Editor’s Note: This piece is part of our ongoing series about the security implications of AI-assisted coding. Sign up for our newsletter to get notified when we publish further articles in the series.