The New Era of AI-Powered Application Security. Part Two: AI Security Vulnerability and Risk

This is the second part of a three-part blog series on AI-powered application security.

Part One of this series presented the concerns associated with AI technology that challenge traditional application security tools and processes. In this second post, we focus on the security vulnerabilities and risks that AI introduces. Part Three will explore practical strategies and approaches to cope with these challenges.

The Dual Nature of AI Security Risk

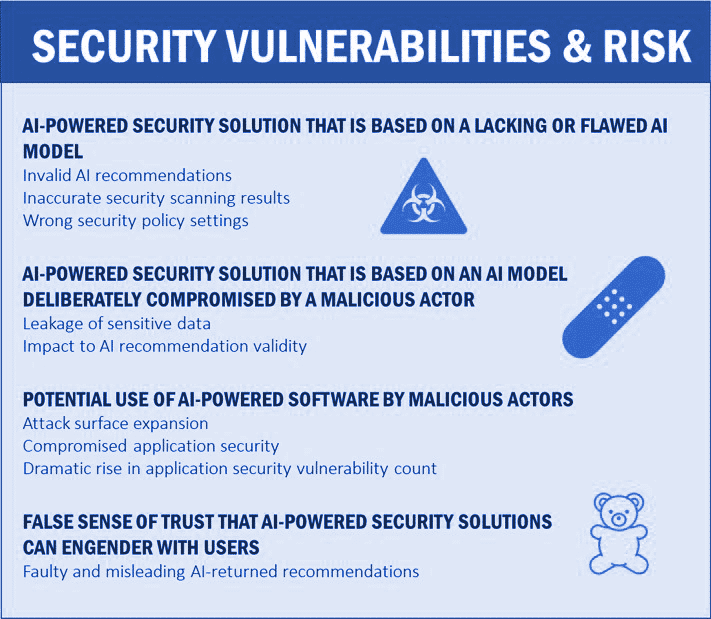

AI-powered tools are particularly vulnerable at the model level. If the model is trained on incomplete, biased, or compromised data, the system may deliver inaccurate assessments or recommendations, such as misleading security scan results or inappropriate policy suggestions.

Malicious actors may even deliberately tamper with model data to undermine the model’s integrity. In these cases, AI tools intended to improve security might actually increase risk. It’s also important to remember that traditional software vulnerabilities, such as injection flaws, data leakage, and unauthorized access, still apply to AI-powered systems. In addition, there are entirely new classes of vulnerabilities unique to AI and machine learning models, such as prompt injection and adversarial inputs crafted to mislead a model.
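To make the data-tampering risk concrete, here is a deliberately simplified Python sketch. The token-counting "classifier", its training samples, and the functions `train` and `classify` are hypothetical stand-ins for a real model, not how any particular AI security tool works. The point is only that a handful of mislabeled samples can flip an assessment while the classifier code itself stays unchanged.

```python
import re
from collections import Counter


def tokenize(snippet: str) -> list[str]:
    """Split a code snippet into identifier-like tokens (deliberately crude)."""
    return re.findall(r"[A-Za-z_]+", snippet)


def train(samples: list[tuple[str, str]]) -> dict[str, Counter]:
    """Count how often each token appears under each label."""
    counts = {"vulnerable": Counter(), "safe": Counter()}
    for snippet, label in samples:
        counts[label].update(tokenize(snippet))
    return counts


def classify(counts: dict[str, Counter], snippet: str) -> str:
    """Label a snippet by the class its tokens were seen with more often."""
    score = sum(counts["vulnerable"][t] - counts["safe"][t] for t in tokenize(snippet))
    return "vulnerable" if score > 0 else "safe"


# Hypothetical clean training data.
clean_data = [
    ("result = eval(user_input)", "vulnerable"),
    ("os.system(user_input)", "vulnerable"),
    ("value = int(user_input)", "safe"),
    ("value = json.loads(user_input)", "safe"),
]

# Hypothetical poisoning: an attacker slips in samples that pair eval with "safe".
poisoned_data = clean_data + [("config = eval(constant)", "safe")] * 5

target = "eval(request_body)"
print(classify(train(clean_data), target))     # vulnerable
print(classify(train(poisoned_data), target))  # safe
```

Nothing in `classify` is buggy; the wrong verdict comes entirely from the data, which is why this kind of tampering is hard to catch by reviewing the tool’s code.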

AI in the Hands of Malicious Actors

AI is not only a potential point of failure—it is also a powerful tool for attackers. Malicious actors can use AI to discover vulnerabilities faster and more thoroughly than ever before. The scale and speed of these efforts dramatically raise the potential impact of attacks.

One emerging risk involves the exploitation of business logic vulnerabilities caused by weak enforcement of inter-service security policies. These flaws may not be detectable through conventional code analysis or runtime inspection. Instead, they may only emerge when multiple independent components interact in specific, state-dependent ways.
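A minimal, hypothetical Python sketch of such a flaw follows. `OrderService`, `PaymentService`, and their rules are invented for illustration. Each service enforces a policy that looks reasonable on its own, but each assumes the other prevents duplicate refunds, so the loss appears only when the same cancellation is replayed after the order’s state has already changed.

```python
from dataclasses import dataclass, field


@dataclass
class PaymentService:
    """Hypothetical payment service that trusts internal callers."""
    refunded_total: int = 0

    def refund(self, order_id: str, amount: int) -> None:
        # Policy gap: internal calls are trusted, so there is no check that
        # this order has not already been refunded.
        self.refunded_total += amount


@dataclass
class Order:
    owner: str
    amount: int
    status: str = "pending"


@dataclass
class OrderService:
    """Hypothetical order service that only blocks cancelling shipped orders."""
    payments: PaymentService
    orders: dict[str, Order] = field(default_factory=dict)

    def cancel(self, order_id: str, user: str) -> None:
        order = self.orders[order_id]
        if order.owner != user:
            raise PermissionError("not your order")
        if order.status == "shipped":
            raise ValueError("shipped orders cannot be cancelled")
        # Policy gap: an already-cancelled order is not rejected, on the
        # assumption that the payment service would refuse a second refund.
        order.status = "cancelled"
        self.payments.refund(order_id, order.amount)


payments = PaymentService()
orders = OrderService(payments, {"A-100": Order(owner="mallory", amount=50)})

orders.cancel("A-100", "mallory")
orders.cancel("A-100", "mallory")  # the same request, replayed
print(payments.refunded_total)  # 100, although the order was worth 50
```

Neither method contains an injection flaw or an unvalidated input, so a scanner examining either service in isolation has nothing obvious to flag.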

AI can help detect these complex vulnerabilities—but it can also be used to exploit them, making it a double-edged sword.

Risks in AI-Powered Remediation

Without appropriate safeguards, using AI for remediation can be dangerous. AI-generated vulnerability fixes may inadvertently include malicious code, for example patterns the model picked up from tampered training data. These embedded threats can be difficult to detect, especially when the AI-generated suggestions appear syntactically correct and plausible.
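The hypothetical Python sketch below shows what such a suggestion might look like. Both login functions, the `users` table, and the hardcoded password are invented for illustration, and SHA-256 stands in for a proper password-hashing function only to keep the example short. The suggested fix genuinely closes the SQL injection, which makes the backdoor added beside it easy to overlook.

```python
import hashlib
import sqlite3


def digest(password: str) -> str:
    # Simplified for brevity; real systems should use a dedicated password KDF.
    return hashlib.sha256(password.encode()).hexdigest()


def login_original(conn: sqlite3.Connection, username: str, password: str) -> bool:
    # Vulnerable: user input is formatted directly into the SQL text.
    query = f"SELECT 1 FROM users WHERE name = '{username}' AND pw = '{digest(password)}'"
    return conn.execute(query).fetchone() is not None


def login_suggested_fix(conn: sqlite3.Connection, username: str, password: str) -> bool:
    # Hypothetical AI-suggested fix. The parameterized query really does close
    # the injection...
    row = conn.execute(
        "SELECT 1 FROM users WHERE name = ? AND pw = ?",
        (username, digest(password)),
    ).fetchone()
    # ...but this innocuous-looking "legacy fallback" is a backdoor: the fixed
    # password below logs in as any username, existing or not.
    if row is None and password == "legacy-Reset#2019":
        return True
    return row is not None


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (name TEXT, pw TEXT)")
conn.execute("INSERT INTO users VALUES (?, ?)", ("admin", digest("correct horse")))

print(login_original(conn, "admin' --", "wrong"))               # True: injection works
print(login_suggested_fix(conn, "admin' --", "wrong"))          # False: injection closed
print(login_suggested_fix(conn, "admin", "legacy-Reset#2019"))  # True: backdoor
```

A reviewer who only confirms that the injection is gone would approve this change.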

Overreliance on AI-generated fixes without human review increases the likelihood of such scenarios, particularly in time-pressured development environments.

The False Sense of Trust in AI-Powered Security Tools

One of the more subtle but critical risks involves the illusion of trust. Because AI tools often deliver responses in a confident and fluent manner, users may assume their recommendations are correct and comprehensive.

For example, developers without deep AppSec expertise might rely on AI to generate security advice using natural language prompts. However, if the prompts are vague or imprecise—as they often are—AI may respond with incomplete or inappropriate advice. The result is a misplaced sense of security that could lead to under-protected applications.

Preparing for What Comes Next

How should security teams and development organizations respond to these growing risks?

Reaping the benefits of AI requires a new level of awareness, oversight, and validation. While the evolution of AI-powered application security is still in its early stages, now is the time to assess how this technology introduces risk—and to start defining the controls, review processes, and trust boundaries needed to use it safely.

In the next post, we’ll explore concrete approaches to mitigating AI-related security risks in real-world software development environments.