Why AI Security Tools Are Different and 9 Tools to Know in 2025

As enterprises embed AI into business-critical workflows, security tools built for traditional applications fall short. AI systems introduce new attack surfaces — prompt injection, model theft, data poisoning — that require a dedicated defense strategy. In this article, we explore why AI security tools must differ from legacy tools, and we present nine that are rising to meet the challenge in 2025. This article is part of a series of articles on AI Security.

What are AI security tools?

As companies embed AI models into their applications, they face risks that traditional security tools weren’t designed to catch, such as prompt injection, data leakage, model poisoning, and shadow AI. Addressing these threats requires a new class of security tools built specifically for AI-specific risks.

These AI security tools typically focus on three areas: securing the data that trains and powers models, hardening the models themselves against theft and manipulation, and protecting the runtime environment where models interact with users. By covering these dimensions, they help defend against attacks like adversarial inputs, model extraction, and data poisoning.

AI security tools can be applied both pre-deployment, to identify weaknesses early, and in production, to monitor behavior and enforce protections. Many also integrate across the AI development lifecycle, bringing continuous testing, compliance, and governance into the process. The result is greater assurance that AI-powered applications remain secure, resilient, and trustworthy.

AI security tools don’t exist in a vacuum. They sit within a larger landscape of risks and defenses that every CISO and AppSec leader needs to consider. For example, retrieval-augmented generation pipelines introduce unique risks, which we explore in our guide on RAG security. Unauthorized AI connectivity, such as hidden Model Context Protocol integrations, can also expose critical blind spots, as detailed in our post on MCP security.

These risks fall under the broader discipline of generative AI security, which is quickly becoming a board-level priority. As adoption accelerates — see our roundup of generative AI statistics — organizations are realizing that model-level threats like extraction and theft must be addressed head-on. Our guide to AI model security walks through practical strategies.

Beyond the threats, there are solutions. From AI guardrails that constrain model behavior, to AI security solutions that clarify the role of AI in security vs. securing AI itself, and AI security posture management tools that provide visibility across the AI estate — the ecosystem is evolving rapidly.

Why traditional security isn’t enough for AI

AI security requires a different approach because the attack surface of AI applications differs from that of traditional applications. Models are dynamic, non-deterministic systems whose behavior changes based on input prompts, fine-tuning, and continuous learning. They are also often built on top of third-party APIs or pretrained weights, introducing risks from external sources that may be opaque to security teams.

Once deployed, AI systems can degrade or be manipulated over time without any obvious changes to the codebase, making post-deployment monitoring as critical as pre-deployment testing. Effective protection must address data integrity, model integrity, and inference-time defenses in addition to conventional infrastructure security.

Why AI security tools are different:

- Must inspect and filter inputs for malicious prompt injection

- Require monitoring for output anomalies and hallucinations in real time

- Need controls to prevent sensitive data leakage from training sets or inference logs

- Must track and govern model versions to detect unauthorized changes or “shadow models”

- Require integration of security at the model lifecycle level, not just the network or application layer
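To make the model-governance point concrete, here is a minimal sketch of detecting unauthorized changes or unregistered "shadow" model files by comparing SHA-256 digests against an approved registry. The function names, the flat directory layout, and the registry format (a dict of file name to digest) are assumptions for illustration, not any vendor's implementation.

```python
import hashlib
from pathlib import Path


def fingerprint(path: Path, chunk_size: int = 1 << 20) -> str:
    """Return the SHA-256 hex digest of a file, read in chunks."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def find_unapproved(model_dir: Path, approved: dict[str, str]) -> list[str]:
    """List model files that are unknown or whose contents have changed.

    `approved` is a hypothetical registry mapping file names to the
    digests recorded when each model was reviewed.
    """
    problems = []
    for path in sorted(model_dir.iterdir()):
        if not path.is_file():
            continue
        expected = approved.get(path.name)
        if expected is None:
            problems.append(f"{path.name}: not in registry (possible shadow model)")
        elif fingerprint(path) != expected:
            problems.append(f"{path.name}: digest mismatch (modified since approval)")
    return problems
```

A check like this only covers files on disk. Models reached through third-party APIs need a different inventory mechanism.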

Related content: Read our guide to AI security solutions

Types of AI security tools

AI security tools are divided into three main types:

Pre-deployment AI security tools

These tools secure AI systems before they are exposed to real users. They identify weaknesses in models, pipelines, and dependencies to reduce the attack surface before launch. Capabilities include model risk assessment, adversarial testing, prompt injection simulation, dependency scanning, and alignment verification. They are often integrated into the CI/CD pipeline to enforce security gates and ensure that only vetted models reach production.
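As a rough illustration of a CI/CD security gate, the sketch below reads a JSON list of findings and blocks the release when any finding meets a severity threshold. The report format (`title` and `severity` fields), the severity labels, and the function names are assumptions. Real scanners each define their own output schema.

```python
import json

# Hypothetical severity scale; real tools define their own labels.
SEVERITY_RANK = {"low": 1, "medium": 2, "high": 3, "critical": 4}
UNKNOWN_RANK = max(SEVERITY_RANK.values())  # fail closed on unrecognized labels


def gate(findings: list[dict], fail_at: str = "high") -> tuple[bool, list[dict]]:
    """Return (passed, blocking_findings) for a list of scan findings."""
    threshold = SEVERITY_RANK[fail_at]
    blocking = []
    for finding in findings:
        label = str(finding.get("severity", "")).lower()
        if SEVERITY_RANK.get(label, UNKNOWN_RANK) >= threshold:
            blocking.append(finding)
    return (not blocking, blocking)


def main(report_path: str) -> int:
    """Load a findings report and return a process exit code (0 = pass)."""
    with open(report_path, encoding="utf-8") as handle:
        findings = json.load(handle)
    passed, blocking = gate(findings)
    for finding in blocking:
        print(f"BLOCKING [{finding.get('severity')}] {finding.get('title', 'untitled')}")
    return 0 if passed else 1
```

In a pipeline step, calling `sys.exit(main("findings.json"))` would fail the job whenever a blocking finding is present. Treating unknown severities as blocking is a deliberate fail-closed choice.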

Post-deployment AI security tools

These tools protect AI systems in live environments. They operate at runtime to detect and block malicious inputs, prevent model abuse, and enforce compliance with policies. Common functions include real-time prompt filtering, output moderation, abuse detection, and anomaly monitoring. They may also include response throttling, automated model rollback, and integration with security information and event management (SIEM) platforms.
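Real-time prompt filtering can be sketched, in its simplest form, as a screen that runs before a prompt reaches the model. The patterns, the length limit, and the `Verdict` type below are illustrative assumptions. Commercial tools typically rely on trained classifiers and much broader signals than a handful of regular expressions.

```python
import re
from dataclasses import dataclass

# Illustrative patterns only; attackers can trivially rephrase around these.
SUSPICIOUS_PATTERNS = [
    re.compile(r"ignore (all |any )?(previous|prior|above) instructions", re.I),
    re.compile(r"(reveal|print|show) (your |the )?(system|hidden) prompt", re.I),
    re.compile(r"you are now (in )?(dan|developer mode)", re.I),
]


@dataclass
class Verdict:
    allowed: bool
    reasons: list[str]


def screen_prompt(prompt: str, max_chars: int = 4000) -> Verdict:
    """Flag prompts that are oversized or match a known injection phrase."""
    reasons = []
    if len(prompt) > max_chars:
        reasons.append("prompt exceeds length limit")
    for pattern in SUSPICIOUS_PATTERNS:
        if pattern.search(prompt):
            reasons.append(f"matched pattern: {pattern.pattern}")
    return Verdict(allowed=not reasons, reasons=reasons)
```

Returning the reasons alongside the decision makes it easier to log blocked requests and tune for the false positives that runtime filters tend to produce.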

AI data protection tools

These tools focus on protecting the data used by AI models during training, fine-tuning, and inference. They implement privacy-preserving techniques such as differential privacy, data masking, and synthetic data generation. In live systems, they scrub sensitive information from retrieval-augmented generation (RAG) pipelines, redact confidential content from logs, and ensure compliance with regulations like GDPR or HIPAA. Together, these controls help prevent both inadvertent leaks and targeted exfiltration attacks. When AI tools reference external corpora (as in RAG systems), new risks emerge; see our guide to RAG security for securing that architecture.
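As one small example of data masking, the sketch below redacts a few common identifier formats from text before it is logged or added to a retrieval index. The three patterns and the placeholder labels are assumptions chosen for illustration. Production tools detect far more entity types and usually combine patterns with statistical or model-based detection.

```python
import re

# Illustrative patterns only: email addresses, US SSN-style numbers,
# and 13 to 16 digit card-like numbers with optional separators.
REDACTIONS = [
    ("EMAIL", re.compile(r"\b[\w.+-]+@[\w-]+(?:\.[\w-]+)+\b")),
    ("SSN", re.compile(r"\b\d{3}-\d{2}-\d{4}\b")),
    ("CARD", re.compile(r"\b\d(?:[ -]?\d){12,15}\b")),
]


def redact(text: str) -> str:
    """Replace each matched identifier with a bracketed placeholder."""
    for label, pattern in REDACTIONS:
        text = pattern.sub(f"[{label}]", text)
    return text


print(redact("Contact jane.doe@example.com, SSN 123-45-6789"))
```

Applying the same function to retrieved documents and to inference logs keeps the masking rules consistent across both paths.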

The following table summarizes the differences between these types:

| Type | Tools | Key Features | Pros and Cons |
|---|---|---|---|
| Pre-Deployment AI Security | Mend.io, Robust Intelligence, HiddenLayer | Model risk assessment, adversarial input testing, automated security policy checks | Pros: Early detection of model weaknesses, integrates with CI/CD. Cons: Requires model access, may slow release cycles. |
| Post-Deployment AI Security | Prompt Security, HiddenLayer, AIM Security | Real-time prompt filtering, abuse detection, output moderation | Pros: Stops malicious prompts instantly, protects against jailbreaks. Cons: May generate false positives, adds inference latency. |
| AI Data Protection | Protect AI, Arthur.ai, Relyance AI | Synthetic data generation, differential privacy, dataset anonymization | Pros: Strong privacy guarantees, preserves data utility. Cons: May reduce model accuracy if over-anonymized. |

Pre-deployment AI security tools

Main focus: Harden AI applications before they go live

From identifying risky AI components to red teaming and prompt protection, these tools help secure models and pipelines before production.

1. Mend.io

Mend.io is an AI-native application security platform that secures applications from code to cloud. The platform includes five tools, such as SCA and SAST, along with Mend AI to secure AI components. Mend AI automatically inventories AI components such as models, agents, MCPs, and RAGs, enriches each component with compliance and security information, applies policies, and simulates adversarial attacks through AI red teaming. Integrated into CI/CD pipelines, Mend AI brings continuous AI risk management into existing pre-deployment stages.

Key features:

- AI component inventory: Discovers and catalogs models, datasets, and APIs

- Prompt hardening: Enforces guardrails against injection and manipulation

- AI red teaming: Tests models against adversarial and jailbreak attacks

- Policy governance: Maps risks to compliance and security standards

- End-to-end coverage: Extends AppSec protection across code, open source, and AI

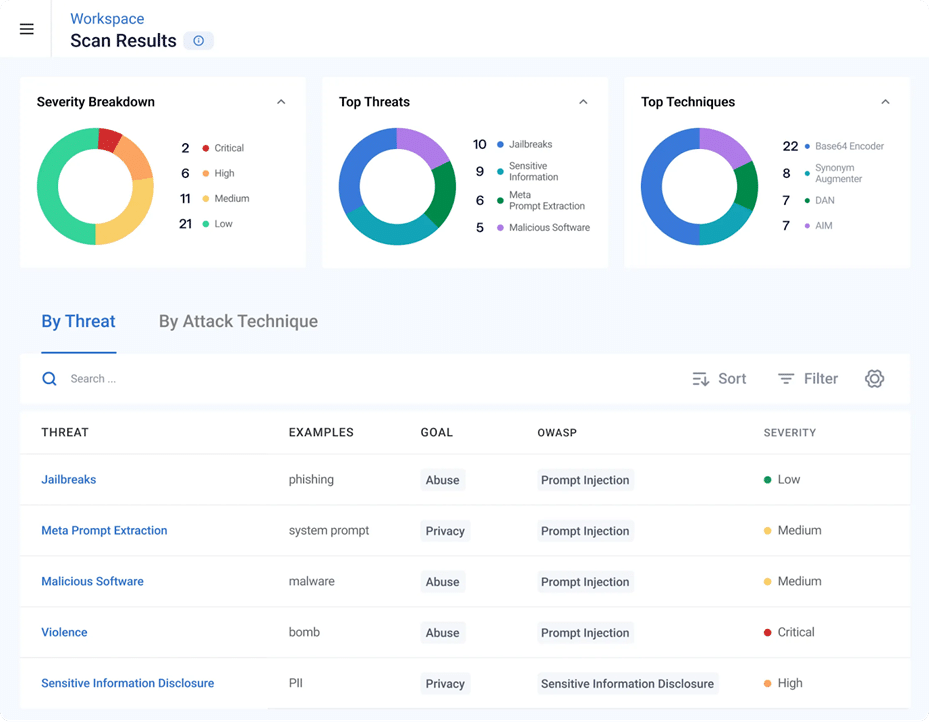

2. Robust Intelligence

Robust Intelligence helps secure AI systems before deployment by automatically scanning models for vulnerabilities. It identifies security and safety risks during development and generates tailored guardrails to mitigate them. The tool integrates with existing CI/CD pipelines and model registries, making it well-suited for continuous testing workflows.

Key features:

- Automated model validation: Scans models, data, and files for vulnerabilities like prompt injection, data poisoning, and hallucinations

- Custom guardrails: Creates protection rules based on identified model weaknesses

- CI/CD and SDK integration: Compatible with pipelines and local development environments

- Security standards mapping: Aligns tests with OWASP, MITRE ATLAS, NIST, and other regulatory frameworks

- Attack coverage: Detects various attack types, including role playing, deserialization, and instruction override

Source: Robust Intelligence

3. HiddenLayer

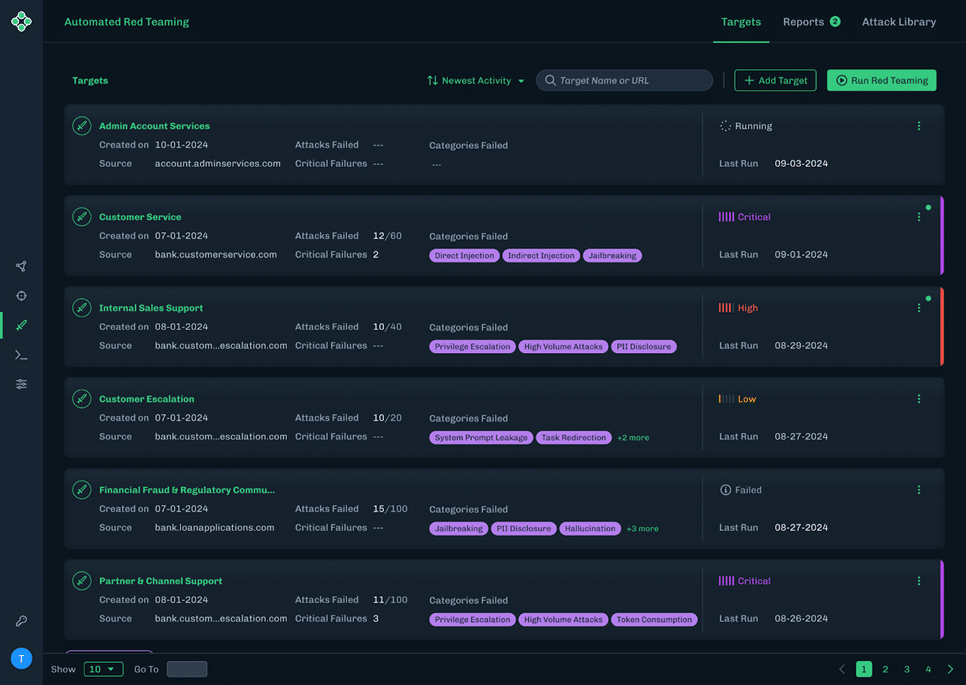

HiddenLayer’s automated red teaming simulates realistic attacks on AI models to uncover security gaps before production. This approach combines expert red team tactics with scalable, automated tools to test systems rapidly and identify vulnerabilities across diverse AI deployments.

Key features:

- Automated red teaming: Runs expert-level simulated attacks to uncover AI-specific vulnerabilities

- Fast setup: Minimal configuration required to launch tests across model endpoints

- OWASP-aligned reports: Provides detailed, compliance-ready documentation of risks and remediations

- Scalability: Supports regular and ad hoc scans across expanding AI systems

- Progress metrics: Tracks posture changes and improvements over time

Source: HiddenLayer

Post-deployment AI security tools

Main focus: Monitor, control, and defend live AI systems

These tools enforce guardrails at runtime to prevent abuse, model exploitation, or policy violations.

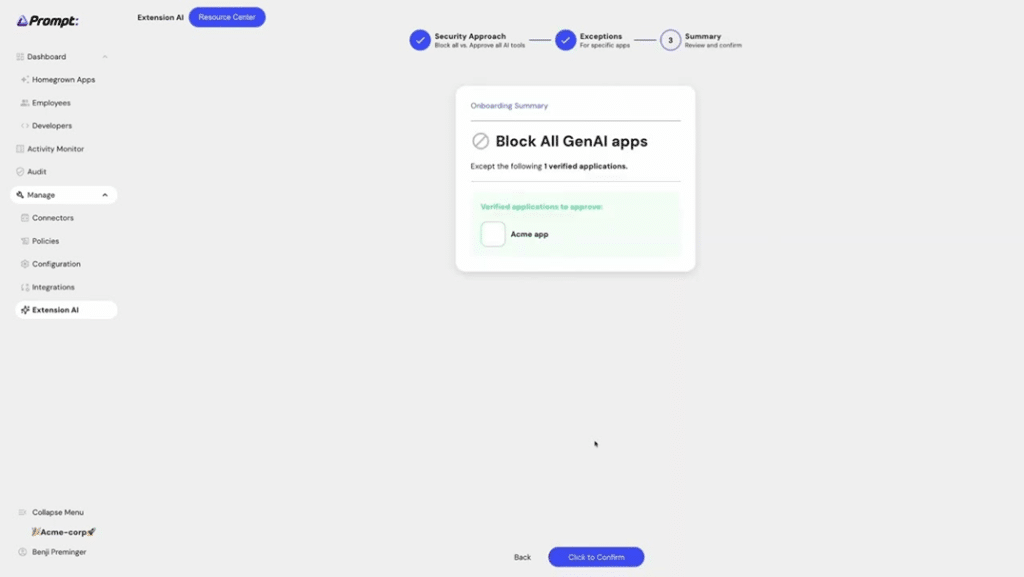

4. Prompt Security

Prompt Security offers runtime protection for homegrown AI applications by filtering sensitive data, moderating output, and detecting risks such as prompt injection and denial-of-wallet attacks. It ensures visibility into all AI interactions and supports a wide range of deployment options.

Key features:

- Prompt injection protection: Prevents prompt manipulation and model abuse in production

- Data leak prevention: Obfuscates and filters PII to meet privacy requirements

- Content moderation: Blocks inappropriate, off-brand, or harmful content

- Traffic logging: Logs all inbound and outbound AI interactions for full visibility

- Flexible deployment: Supports cloud, on-prem, and VPC-hosted environments

Source: Prompt Security

5. HiddenLayer

HiddenLayer’s post-deployment solution monitors AI and agentic systems in real time, detecting threats such as model tampering, PII leaks, and adversarial attacks. It integrates into MLOps pipelines and security frameworks to enable continuous protection aligned with MITRE and OWASP standards.

Key features:

- Real-time detection: Monitors for prompt injection, model theft, and data exfiltration

- MLOps integration: Seamlessly integrates into existing AI development and deployment pipelines

- Compliance alignment: Maps threats to MITRE ATLAS and OWASP Top 10

- Adversarial threat coverage: Protects against inference attacks, excessive agency, and evasion

- Model-agnostic protection: Supports both proprietary and third-party AI models

Source: HiddenLayer

6. AIM Security

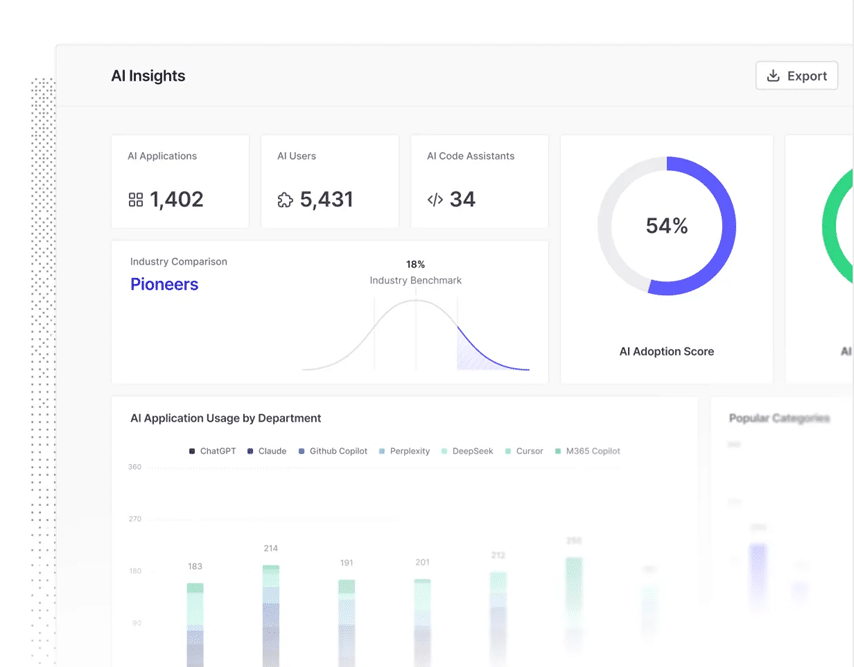

AIM Security provides runtime protection and governance for AI across the full enterprise environment. Its platform secures user interactions, detects misuse, and offers centralized insights into AI usage, helping organizations safely scale their AI adoption.

Key features:

- AI runtime protection: Intercepts and inspects all AI interactions for security violations

- Data security: Prevents leakage of sensitive data to untrusted third-party models

- Governance and compliance: Supports enforcement of AI policies and regulatory requirements

- Attack surface monitoring: Identifies threats like malicious prompts, backdoored models, and remote code execution

- Deployment flexibility: Secures AI in any environment, including chatbots, internal tools, and enterprise LLMs

Source: AIM

AI data protection tools

Main focus: Secure the data that trains, feeds, and interacts with AI

From privacy in training datasets to runtime RAG scrubbing, these tools protect data against leakage, misuse, and compliance failures.

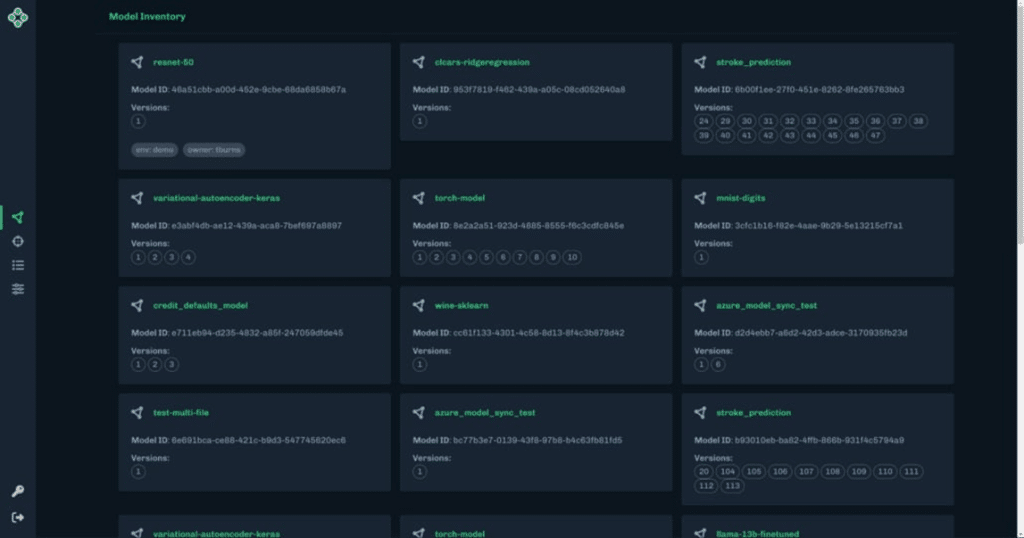

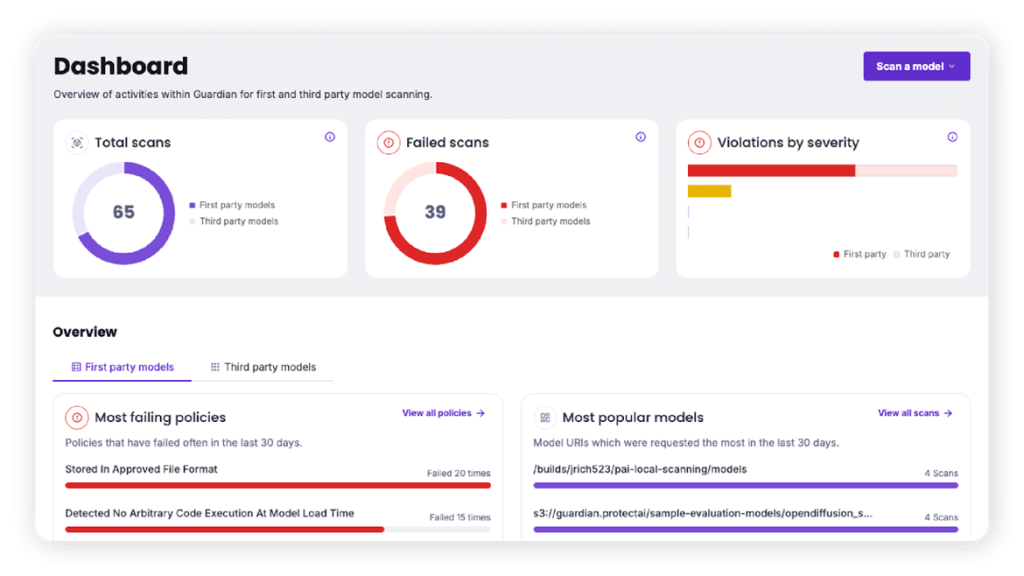

7. Protect AI

Protect AI offers tools to detect and prevent threats during the model serialization process, a vulnerable phase when models are transferred across systems. Its open-source tool, ModelScan, inspects models across multiple formats to identify unsafe code that could lead to data leaks, credential theft, or model poisoning.

Key features:

- Model format support: Scans H5, Pickle, SavedModel, and other common formats

- Serialization attack protection: Detects and prevents malicious code hidden in model files

- Credential and data theft detection: Flags risks of compromised access credentials and data exposure

- Model poisoning checks: Identifies tampered or altered models before deployment

- Open source access: Freely available and integrable into existing workflows

Source: Protect AI
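To show why serialized models are risky, the sketch below uses Python's standard `pickletools` module to list opcodes in a pickle stream that can import or call arbitrary objects, without ever unpickling the data. This is an illustration of the general idea only, not how ModelScan is implemented. The opcode set and the `risky_opcodes` function name are assumptions.

```python
import pickle
import pickletools

# Opcodes that import names or invoke callables during unpickling.
RISKY_OPCODES = {
    "GLOBAL", "STACK_GLOBAL", "REDUCE", "INST", "OBJ", "NEWOBJ", "NEWOBJ_EX",
}


def risky_opcodes(data: bytes) -> list[str]:
    """Return risky opcodes found in a pickle stream, without loading it."""
    found = []
    for opcode, arg, _position in pickletools.genops(data):
        if opcode.name in RISKY_OPCODES:
            found.append(opcode.name if arg is None else f"{opcode.name} {arg}")
    return found


class Payload:
    """Stand-in for a malicious object; unpickling it would call print()."""

    def __reduce__(self):
        return (print, ("code executed during unpickling",))


print(risky_opcodes(pickle.dumps({"weights": [1.0, 2.0]})))
print(risky_opcodes(pickle.dumps(Payload())))
```

Legitimate model pickles also use these opcodes to rebuild ordinary classes, so a practical scanner would go further and compare the imported module and function names against allow and deny lists.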

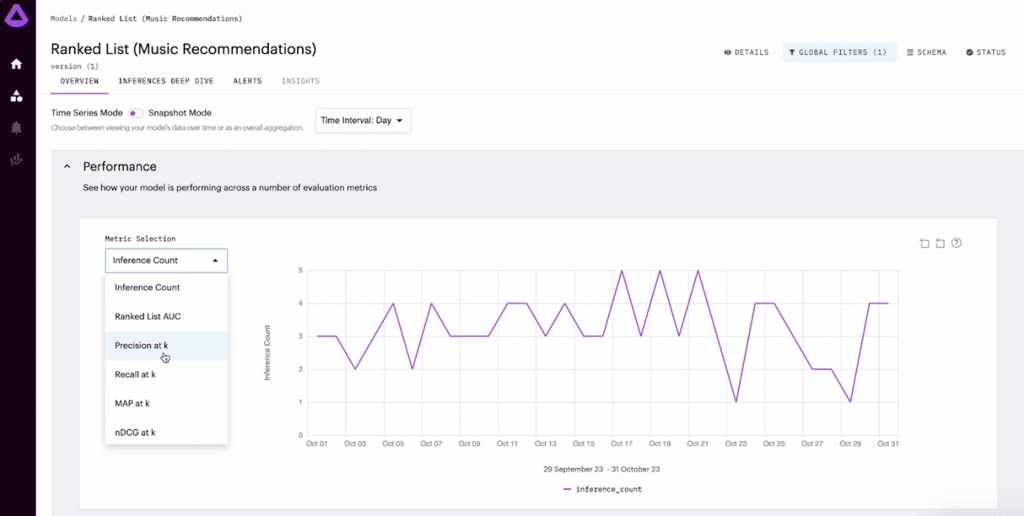

8. Arthur.ai

Arthur.ai provides observability and monitoring across the AI lifecycle, helping organizations detect misuse, track system behavior, and enforce guardrails. It supports evaluations for generative, traditional, and agentic AI, while protecting sensitive data with in-VPC deployment.

Key features:

- Lifecycle monitoring: Tracks performance and risks during pre-production and runtime

- Guardrails for usage: Enforces acceptable use policies and flags off-brand or risky outputs

- Privacy and security controls: Identifies PII, hallucinations, and sensitive content leaks

- In-VPC deployment: Keeps all inference data local, with only anonymized metrics sent externally

- Evaluation support: Measures groundedness, hallucination rates, and prompt relevance

Source: Arthur.ai

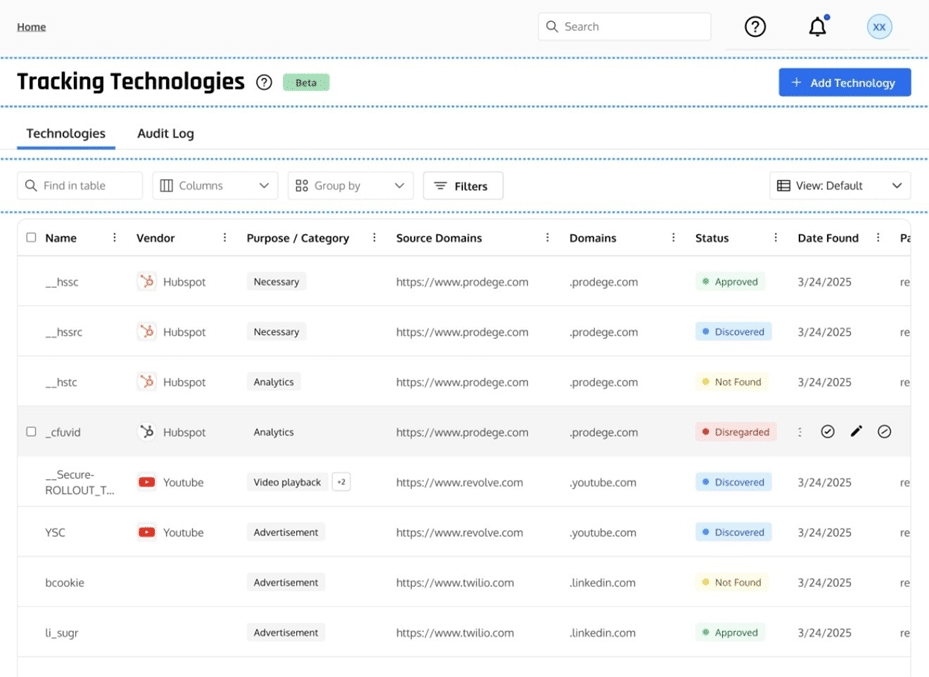

9. Relyance AI

Relyance AI provides a unified platform for managing AI privacy, governance, and compliance in real time. It maps the entire AI footprint across first- and third-party systems, continuously monitors data handling practices, and automates policy enforcement to support regulatory adherence.

Key features:

- AI asset discovery: Automatically maps and inventories all AI systems

- Real-time monitoring: Tracks bias, drift, and compliance issues across the lifecycle

- Policy-as-code enforcement: Embeds dynamic privacy and security checks into workflows

- Continuous compliance: Moves beyond periodic audits with ongoing risk detection

- Audit-ready records: Maintains verifiable logs for governance and trust building

Source: Relyance AI

AI security with Mend.io

AI systems don’t just run models; they ingest sensitive data, evolve via user input, and can behave unpredictably.

Effective AI security stacks span pre-deployment rigor, runtime enforcement, and data governance.

Start with Mend.io to gain visibility into AI in your code, then layer in tools like Prompt Security and Protect AI to stay protected post-deployment.